Some refactoring toward replacing **util.OptionalBool** with
**optional.Option[bool]**

---------

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Since `modules/context` has to depend on `models` and many other
packages, it should be moved from `modules/context` to
`services/context` according to the design principles. This PR changes
no logic; it only moves packages.

- Move `code.gitea.io/gitea/modules/context` to
  `code.gitea.io/gitea/services/context`
- Move `code.gitea.io/gitea/modules/contexttest` to
  `code.gitea.io/gitea/services/contexttest` because it depends on
  context
- Move `code.gitea.io/gitea/modules/upload` to
  `code.gitea.io/gitea/services/context/upload` because it depends on
  context

Adds a new API `/repos/{owner}/{repo}/commits/{sha}/pull` that allows
you to get the merged PR associated with a commit.

---------

Co-authored-by: 6543 <6543@obermui.de>

Clarify when "string" should be used (and be escaped), and when
"template.HTML" should be used (no need to escape).

This also helps PRs like #29059 render error messages correctly.

With this option, it is possible to require a linear commit history with
the following benefits over the next best option, `Rebase+fast-forward`:
the original commits continue to exist, with their original signatures
staying valid instead of being rewritten; there is no merge commit; and
reverting commits becomes easier.

Closes #24906

The old code `GetTemplatesFromDefaultBranch(...) ([]*api.IssueTemplate, map[string]error)`
doesn't follow Go conventions, so the second returned value can easily
be misused. For example, the API function `GetIssueTemplates`
incorrectly checked the second returned value and always responded with
a 500 error.

This PR refactors GetTemplatesFromDefaultBranch to

ParseTemplatesFromDefaultBranch and clarifies its behavior, and fixes the

API endpoint bug, and adds some tests.

And by the way, add proper prefix `X-` for the header generated in

`checkDeprecatedAuthMethods`, because non-standard HTTP headers should

have `X-` prefix, and it is also consistent with the new code in

`GetIssueTemplates`

In #28691, schedule plans will be deleted when a repo's actions unit is

disabled. But when the unit is enabled, the schedule plans won't be

created again.

This PR fixes the bug. The schedule plans will be created again when the

actions unit is re-enabled

## Purpose

This is a refactor toward building an abstraction over managing git

repositories.

Afterwards, it does not matter anymore if they are stored on the local

disk or somewhere remote.

## What this PR changes

We used `git.OpenRepository` everywhere previously.

Now, we should split them into two distinct functions:

Firstly, there are temporary repositories which do not change:

```go

git.OpenRepository(ctx, diskPath)

```

Gitea managed repositories having a record in the database in the

`repository` table are moved into the new package `gitrepo`:

```go

gitrepo.OpenRepository(ctx, repo_model.Repository)

```

Why is `repo_model.Repository` the second parameter instead of file

path?

Because then we can easily adapt our repository storage strategy.

The repositories can be stored locally, however, they could just as well

be stored on a remote server.

## Further changes in other PRs

- A Git command wrapper could be created in package `gitrepo`, e.g.
  `NewCommand(ctx, repo_model.Repository, commands...)`. The `Dir` field of
  `git.RunOpts{Dir: repo.RepoPath()}` should be empty before invoking this
  method, and it should be filled in only inside the function. #28940

- Remove the `RepoPath()`/`WikiPath()` functions to reduce the

possibility of mistakes.

---------

Co-authored-by: delvh <dev.lh@web.de>

Currently, the `updateMirror` function, which updates the mirror interval
and enable-prune properties, is only executed by the `Edit` function. But
it is only triggered if `opts.MirrorInterval` is not null, even if
`opts.EnablePrune` is not null.

With this patch, it is now possible to update the enable_prune property

with a patch request without modifying the mirror_interval.
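
The intended behavior can be sketched as follows. This is a simplified illustration under stated assumptions, not the actual `updateMirror` code: `editOptions`, `mirror` and `applyMirrorOptions` are hypothetical names, and pointer fields stand in for "null means not sent".

```go
package main

import "fmt"

// editOptions is a hypothetical stand-in for the PATCH request body.
// A nil pointer means the client did not send that property.
type editOptions struct {
	MirrorInterval *string
	EnablePrune    *bool
}

// mirror is a hypothetical stand-in for the stored mirror settings.
type mirror struct {
	Interval    string
	EnablePrune bool
}

// applyMirrorOptions updates each property independently of the other
// and reports whether anything was changed.
func applyMirrorOptions(m *mirror, opts editOptions) bool {
	changed := false
	if opts.MirrorInterval != nil {
		m.Interval = *opts.MirrorInterval
		changed = true
	}
	if opts.EnablePrune != nil {
		m.EnablePrune = *opts.EnablePrune
		changed = true
	}
	return changed
}

func main() {
	m := mirror{Interval: "8h", EnablePrune: true}
	off := false
	// Only enable_prune is sent; the interval must stay untouched.
	changed := applyMirrorOptions(&m, editOptions{EnablePrune: &off})
	fmt.Println(changed, m.Interval, m.EnablePrune)
}
```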

## Example request with httpie

### Currently:

**Does nothing**

```bash

http PATCH https://gitea.your-server/api/v1/repos/myOrg/myRepo "enable_prune:=false" -A bearer -a $gitea_token

```

**Updates both properties**

```bash

http PATCH https://gitea.your-server/api/v1/repos/myOrg/myRepo "enable_prune:=false" "mirror_interval=10m" -A bearer -a $gitea_token

```

### With the patch

**Updates enable_prune only**

```bash

http PATCH https://gitea.your-server/api/v1/repos/myOrg/myRepo "enable_prune:=false" -A bearer -a $gitea_token

```

Sometimes you need to work on a feature which depends on another (unmerged) feature.

In this case, you may create a PR based on that feature instead of the main branch.

Currently, such PRs will be closed without the possibility to reopen in case the parent feature is merged and its branch is deleted.

Automatic target branch changes make life a lot easier in such cases.

GitHub and Bitbucket behave this way.

Example:

$PR_1$: main <- feature1

$PR_2$: feature1 <- feature2

Currently, merging $PR_1$ and deleting its branch leads to $PR_2$ being closed without the possibility to reopen.

This is both annoying and loses the review history when you open a new PR.

With this change, $PR_2$ will change its target branch to main ($PR_2$: main <- feature2) after $PR_1$ has been merged and its branch has been deleted.

This behavior is enabled by default but can be disabled.

For security reasons, this target branch change will not be executed when merging PRs targeting another repo.
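
The retargeting rule described above can be sketched like this. It is an illustrative model only: the `pullRequest` type and `retargetChildren` function are hypothetical, and the cross-repo check is one reading of the security restriction.

```go
package main

import "fmt"

// pullRequest is a hypothetical, simplified model of a PR: it merges
// HeadBranch of HeadRepo into BaseBranch of BaseRepo.
type pullRequest struct {
	Name       string
	HeadRepo   string
	HeadBranch string
	BaseRepo   string
	BaseBranch string
}

// retargetChildren is called after `merged` has been merged and its head
// branch deleted. Open PRs that targeted the deleted branch are pointed at
// the branch `merged` was merged into. It returns how many PRs were changed.
func retargetChildren(merged pullRequest, open []*pullRequest) int {
	// Assumed reading of the security rule: do nothing for cross-repo merges.
	if merged.HeadRepo != merged.BaseRepo {
		return 0
	}
	n := 0
	for _, pr := range open {
		if pr.BaseRepo == merged.HeadRepo && pr.BaseBranch == merged.HeadBranch {
			pr.BaseBranch = merged.BaseBranch
			n++
		}
	}
	return n
}

func main() {
	pr1 := pullRequest{"PR_1", "org/repo", "feature1", "org/repo", "main"}
	pr2 := &pullRequest{"PR_2", "org/repo", "feature2", "org/repo", "feature1"}
	n := retargetChildren(pr1, []*pullRequest{pr2})
	fmt.Printf("%d retargeted: %s: %s <- %s\n", n, pr2.Name, pr2.BaseBranch, pr2.HeadBranch)
}
```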

Fixes #27062

Fixes #18408

---------

Co-authored-by: Denys Konovalov <kontakt@denyskon.de>

Co-authored-by: delvh <dev.lh@web.de>

Fixes #27114.

* In Gitea 1.12 (#9532), a "dismiss stale approvals" branch protection

setting was introduced, for ignoring stale reviews when verifying the

approval count of a pull request.

* In Gitea 1.14 (#12674), the "dismiss review" feature was added.

* This caused confusion with users (#25858), as "dismiss" now means 2

different things.

* In Gitea 1.20 (#25882), the behavior of the "dismiss stale approvals"

branch protection was modified to actually dismiss the stale review.

For some users this new behavior of dismissing the stale reviews is not

desirable.

So this PR reintroduces the old behavior as a new "ignore stale

approvals" branch protection setting.

---------

Co-authored-by: delvh <dev.lh@web.de>

Fix #28157

This PR fixes possible bugs in the actions schedule.

## The Changes

- Move `UpdateRepositoryUnit` and `SetRepoDefaultBranch` from models to

service layer

- Remove schedule plans from the database and cancel waiting and running
  schedule tasks in the repository when the actions unit is disabled,
  either for the repository or globally.
- Remove schedule plans from the database and cancel waiting and running
  schedule tasks in the repository when the default branch changes.

Fix #27722

Fix #27357

Fix #25837

1. Fix the typo `BlockingByDependenciesNotPermitted`, which causes the

`not permitted message` not to show. The correct one is `Blocking` or

`BlockedBy`

2. Rewrite the perm check. The perm check uses a very tricky way to

avoid duplicate checks for a slice of issues, which is confusing. In

fact, it's also the reason causing the bug. It uses `lastRepoID` and

`lastPerm` to avoid duplicate checks, but forgets to assign the

`lastPerm` at the end of the code block. So I rewrote this to avoid this

trick.

3. It also reuses the `blocks` slice, which is even more confusing. So I

rewrote this too.

Introduce the new generic deletion methods

- `func DeleteByID[T any](ctx context.Context, id int64) (int64, error)`

- `func DeleteByIDs[T any](ctx context.Context, ids ...int64) error`

- `func Delete[T any](ctx context.Context, opts FindOptions) (int64,

error)`

So, we no longer need any specific deletion method and can just use

the generic ones instead.

Replacement of #28450

Closes #28450

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

- Remove `ObjectFormatID`

- Remove function `ObjectFormatFromID`.

- Use `Sha1ObjectFormat` directly but not a pointer because it's an

empty struct.

- Store `ObjectFormatName` in `repository` struct

Refactor Hash interfaces and centralize hash function. This will allow

easier introduction of different hash function later on.

This forms the "no-op" part of the SHA256 enablement patch.

Fix #28056

This PR checks whether the repo has zero branches when a branch is
pushed. If so, the repository hasn't been synced yet.

This happens when a user upgrades from v1.20 to v1.21 and then pushes
branches without visiting the repository user interface: all repository
routers check whether a branch sync is necessary, but push has no such
check.

Every repository is in one of two states: synced or not synced. If the
database has zero branches for a repository, it is assumed to be not
synced; otherwise it is synced. If it is synced, we only need to update
the branch or insert a new one; otherwise we do a full sync. This way, a
push adds almost no extra load, which performs better than the
alternative approach.

For the implementation, we first try to update the branch. If the update
succeeds with affected records > 0, we are done, because the branch is
already in the database. If no record is affected, the branch does not
exist in the database, and there are two possibilities: either this is a
new branch and we just need to insert the record, or the branches
haven't been synced and we need to sync all of them into the database.
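
The decision flow above can be sketched with an in-memory stand-in for the database. This is a minimal illustration, not the PR's code: `branchTable`, `update` and `onPush` are hypothetical names, and a map replaces the real table.

```go
package main

import "fmt"

// branchTable is a hypothetical in-memory stand-in for the branch table
// of one repository: branch name -> commit ID.
type branchTable map[string]string

// update mimics an UPDATE statement and returns the number of affected rows.
func (t branchTable) update(name, commit string) int {
	if _, ok := t[name]; !ok {
		return 0
	}
	t[name] = commit
	return 1
}

// onPush records a pushed branch. gitBranches is the full branch list read
// from git and is only consulted when a full sync is needed.
func onPush(t branchTable, gitBranches map[string]string, name, commit string) string {
	// Cheap path: the branch is already known, so one UPDATE is enough.
	if t.update(name, commit) > 0 {
		return "updated"
	}
	// Zero branches in the database means the repository was never synced.
	if len(t) == 0 {
		for n, c := range gitBranches {
			t[n] = c
		}
		return "full sync"
	}
	// Otherwise this is simply a new branch.
	t[name] = commit
	return "inserted"
}

func main() {
	table := branchTable{}
	git := map[string]string{"main": "a1", "dev": "b2"}
	fmt.Println(onPush(table, git, "dev", "b2")) // never synced before
	fmt.Println(onPush(table, git, "dev", "b3")) // known branch
	fmt.Println(onPush(table, git, "feat", "c4")) // new branch
}
```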

Part of #27065

This PR touches functions used in templates. As templates are not
statically typed, errors are harder to find, but I hope I caught them
all. Some testing by other people would not hurt.

This PR removes `TestOptions.GiteaRoot` from the second parameter of
`unittest.MainTest`. The root directory is now detected from the current
working directory.

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

This PR adds a new field `RemoteAddress` to both mirror types which

contains the sanitized remote address for easier (database) access to

that information. Will be used in the audit PR if merged.

Part of #27065

This reduces the usage of `db.DefaultContext`. I think I've got enough

files for the first PR. When this is merged, I will continue working on

this.

Considering how many files this PR affects, I hope it won't take too long
to merge, so I don't end up in merge conflict hell.

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Most middleware returns a 404 when something is not found, e.g. a repo
that does not exist. But most API endpoints don't include the 404
response in their documentation. This PR changes this.

Fixes #24944

Since a user with write permissions for issues can add attachments to an
issue via the web interface, the user should also be able to add
attachments via the API.

- Modify the `CreateOrUpdateSecret` function in `api.go` to include a

`Delete` operation for the secret

- Modify the `DeleteOrgSecret` function in `action.go` to include a

`DeleteSecret` operation for the organization

- Modify the `DeleteSecret` function in `action.go` to include a

`DeleteSecret` operation for the repository

- Modify the `v1_json.tmpl` template file to update the `operationId`

and `summary` for the `deleteSecret` operation in both the organization

and repository sections

---------

Signed-off-by: Bo-Yi Wu <appleboy.tw@gmail.com>

Just like `models/unittest`, the testing helper functions should be in a

separate package: `contexttest`

And complete the TODO:

> // TODO: move this function to other packages, because it depends on "models" package

spec:

https://docs.github.com/en/rest/actions/secrets?apiVersion=2022-11-28#create-or-update-a-repository-secret

- Add a new route for creating or updating a secret value in a

repository

- Create a new file `routers/api/v1/repo/action.go` with the

implementation of the `CreateOrUpdateSecret` function

- Update the Swagger documentation for the `updateRepoSecret` operation

in the `v1_json.tmpl` template file

---------

Signed-off-by: Bo-Yi Wu <appleboy.tw@gmail.com>

Co-authored-by: Giteabot <teabot@gitea.io>

Fixes: #26333.

Previously, this endpoint only updated the `StatusCheckContexts` field
when `EnableStatusCheck==true`, which made it impossible to clear the
array otherwise.

This patch uses slice `nil`-ness to decide whether to update the list of

checks. The field is ignored when either the client explicitly passes in

a null, or just omits the field from the json ([which causes

`json.Unmarshal` to leave the struct field

unchanged](https://go.dev/play/p/Z2XHOILuB1Q)). I think this is a better

measure of intent than whether the `EnableStatusCheck` flag was set,

because it matches the semantics of other field types.
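
The `nil`-ness rule can be checked with a small standalone program. This is a sketch, not the endpoint's code: the struct, the helper name and the JSON key `status_check_contexts` are assumptions made for illustration.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// editBranchProtection is a hypothetical, reduced request body.
type editBranchProtection struct {
	StatusCheckContexts []string `json:"status_check_contexts"`
}

// shouldUpdateContexts reports whether the request body asks for the list
// of status checks to be changed, based only on slice nil-ness.
func shouldUpdateContexts(body string) (bool, error) {
	var opts editBranchProtection
	if err := json.Unmarshal([]byte(body), &opts); err != nil {
		return false, err
	}
	return opts.StatusCheckContexts != nil, nil
}

func main() {
	bodies := []string{
		`{}`,                                // field omitted: slice stays nil
		`{"status_check_contexts": null}`,   // explicit null: slice stays nil
		`{"status_check_contexts": []}`,     // empty array: non-nil, clears the list
		`{"status_check_contexts": ["ci"]}`, // non-empty array: replaces the list
	}
	for _, b := range bodies {
		update, err := shouldUpdateContexts(b)
		fmt.Println(b, "->", update, err)
	}
}
```

The third case is the one that was previously impossible to express: an empty, non-nil slice means "clear the list".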

Also adds a test case. I noticed that [`testAPIEditBranchProtection`

only checks the branch

name](c1c83dbaec/tests/integration/api_branch_test.go (L68))

and no other fields, so I added some extra `GET` calls and specific

checks to make sure the fields are changing properly.

I added those checks to the existing integration test; is that the right
place for them?

## Archived labels

This adds the structure to allow for archived labels.

Archived labels are, just like closed milestones or projects, a medium to hide information without deleting it.

It is especially useful if there are outdated labels that should no longer be used without deleting the label entirely.

## Changes

1. UI and API have been equipped with the support to mark a label as archived

2. The time when a label has been archived will be stored in the DB

## Outsourced for the future

There's no special handling for archived labels at the moment.

This will be done in the future.

Part of https://github.com/go-gitea/gitea/issues/25237

---------

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Fix #24662.

Replace #24822 and #25708 (although it has been merged)

## Background

In the past, Gitea supported issue searching with a keyword and

conditions in a less efficient way. It worked by searching for issues

with the keyword and obtaining limited IDs (as it is heavy to get all)

on the indexer (bleve/elasticsearch/meilisearch), and then querying with

conditions on the database to find a subset of the found IDs. This is

why the results could be incomplete.

To solve this issue, we need to store all fields that could be used as

conditions in the indexer and support both keyword and additional

conditions when searching with the indexer.

## Major changes

- Redefine `IndexerData` to include all fields that could be used as

filter conditions.

- Refactor `Search(ctx context.Context, kw string, repoIDs []int64,

limit, start int, state string)` to `Search(ctx context.Context, options

*SearchOptions)`, so it supports more conditions now.

- Change the data type stored in `issueIndexerQueue`. Use

`IndexerMetadata` instead of `IndexerData` in case the data has been

updated while it is in the queue. This also reduces the storage size of

the queue.

- Enhance searching with Bleve/Elasticsearch/Meilisearch, make them

fully support `SearchOptions`. Also, update the data versions.

- Keep most of the database indexer's logic, but remove
  `issues.SearchIssueIDsByKeyword` in `models` to avoid confusion about
  where the entry point for searching issues is.

- Start a Meilisearch instance to test it in unit tests.

- Add unit tests with almost full coverage to test

Bleve/Elasticsearch/Meilisearch indexer.

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

To avoid deadlock problems, almost all database-related functions should
have ctx as the first parameter.

This PR refactors some of these functions.

Before: the concept "Content string" is used everywhere. It has some

problems:

1. Sometimes it means "base64 encoded content", sometimes it means "raw

binary content"

2. It doesn't work with large files, eg: uploading a 1G LFS file would

make Gitea process OOM

This PR does the refactoring: use "ContentReader" / "ContentBase64"

instead of "Content"

This PR is not breaking because the key in API JSON is still "content":

`` ContentBase64 string `json:"content"` ``
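
A quick way to see why the rename is not breaking: the Go field name changes, but the struct tag keeps the JSON key. The sketch below uses a hypothetical `fileOptions` struct that contains only that one field.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
	"fmt"
)

// fileOptions is a hypothetical, reduced request body. Only the Go field
// name says "Base64"; the JSON key stays "content".
type fileOptions struct {
	ContentBase64 string `json:"content"`
}

// encodeBody base64-encodes raw bytes and marshals them into the JSON body.
func encodeBody(raw []byte) (string, error) {
	opts := fileOptions{ContentBase64: base64.StdEncoding.EncodeToString(raw)}
	b, err := json.Marshal(opts)
	return string(b), err
}

func main() {
	body, err := encodeBody([]byte("hello"))
	if err != nil {
		panic(err)
	}
	fmt.Println(body) // {"content":"aGVsbG8="}
}
```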

A couple of notes:

* Future changes should refactor arguments into a struct

* This filtering is only supported by Meilisearch right now

* Issue index number is bumped which will cause a re-index

Fix #25558

Extract from #22743

This PR adds a repository check when creating/deleting branches via the
API. Mirror repositories and archived repositories cannot do that.

This adds an API for uploading and deleting avatars of users, repos
and organisations. I'm not sure whether this should also be added to the
Admin API.

Resolves #25344

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Giteabot <teabot@gitea.io>

Related #14180

Related #25233

Related #22639

Close #19786

Related #12763

This PR changes all branch retrieval methods from reading git data to
reading the database, to reduce git read operations.

- [x] Sync git branch information into the database when git data is pushed
- [x] Create a new table `Branch`, merge some columns of `DeletedBranch`
  into the `Branch` table, and drop the table `DeletedBranch`.
- [x] Read the `Branch` table when visiting the `code` -> `branch` page
- [x] Read the `Branch` table when listing branch names in the `code` page dropdown
- [x] Read the `Branch` table when listing refs on the git compare page
- [x] Provide a button on the admin page to manually sync all branches.
- [x] Sync branches if the repository is not empty but the database has no
  branches when visiting pages with a branch list
- [x] Use `commit_time desc` as the default FindBranch ordering to stay
  consistent with the previous behavior; deleted branches are always at the end.

---------

Co-authored-by: Jason Song <i@wolfogre.com>

In the process of doing a bit of automation via the API, we've

discovered a _small_ issue in the Swagger definition. We tried to create

a push mirror for a repository, but our generated client raised an

exception due to an unexpected status code.

When looking at this function:

3c7f5ed7b5/routers/api/v1/repo/mirror.go (L236-L240)

We see it defines `201 - Created` as response:

3c7f5ed7b5/routers/api/v1/repo/mirror.go (L260-L262)

But it actually returns `200 - OK`:

3c7f5ed7b5/routers/api/v1/repo/mirror.go (L373)

So I've just updated the Swagger definitions to match the code😀

---------

Co-authored-by: Giteabot <teabot@gitea.io>

1. The "web" package shouldn't depend on the "modules/context" package;
instead, each "web context" should register itself with the "web"
package.

2. The old Init/Free doesn't make sense, so simplify it

* The ctx in "Init(ctx)" is never used, and shouldn't be used that way

* The "Free" is never called and shouldn't be called because the SSPI

instance is shared

---------

Co-authored-by: Giteabot <teabot@gitea.io>

Follow up #22405

Fix #20703

This PR rewrites the order in which storage configuration is read, with
some breaking changes and tests. It is stricter than before and also
fixes some inheritance problems.

- Move storage's MinioConfig struct into setting, so that after the
  configuration is loaded, the values are stored in the struct rather
  than left in a section.
- All storage configuration should be stored in one section;
  configuration items cannot be overridden by multiple sections. The
  priority of configuration is `[attachment]` > `[storage.attachments]` |
  `[storage.customized]` > `[storage]` > `default`
- The extra override configuration items, currently `SERVE_DIRECT`,
  `MINIO_BASE_PATH` and `MINIO_BUCKET`, can be configured in another
  section. The priority of the override configuration is `[attachment]` >
  `[storage.attachments]` > `default`.

- Add more tests for storage configuration.
- Update the storage documentation.

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Fixes some issues with the swagger documentation for the new multiple

files API endpoint (#24887) which were overlooked when submitting the

original PR:

1. add some missing parameter descriptions

2. set correct `required` option for required parameters

3. change endpoint description to match its full functionality (every
kind of file modification is supported, not just creating and updating)

This addresses some things from #24406 that came up after the PR was
merged, mostly from @delvh.

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: delvh <dev.lh@web.de>

This PR creates an API endpoint for creating/updating/deleting multiple

files in one API call similar to the solution provided by

[GitLab](https://docs.gitlab.com/ee/api/commits.html#create-a-commit-with-multiple-files-and-actions).

To achieve this, the CreateOrUpdateRepoFile and DeleteRepoFile functions
in the files service are unified into one function supporting multiple
files and actions.

Resolves #14619

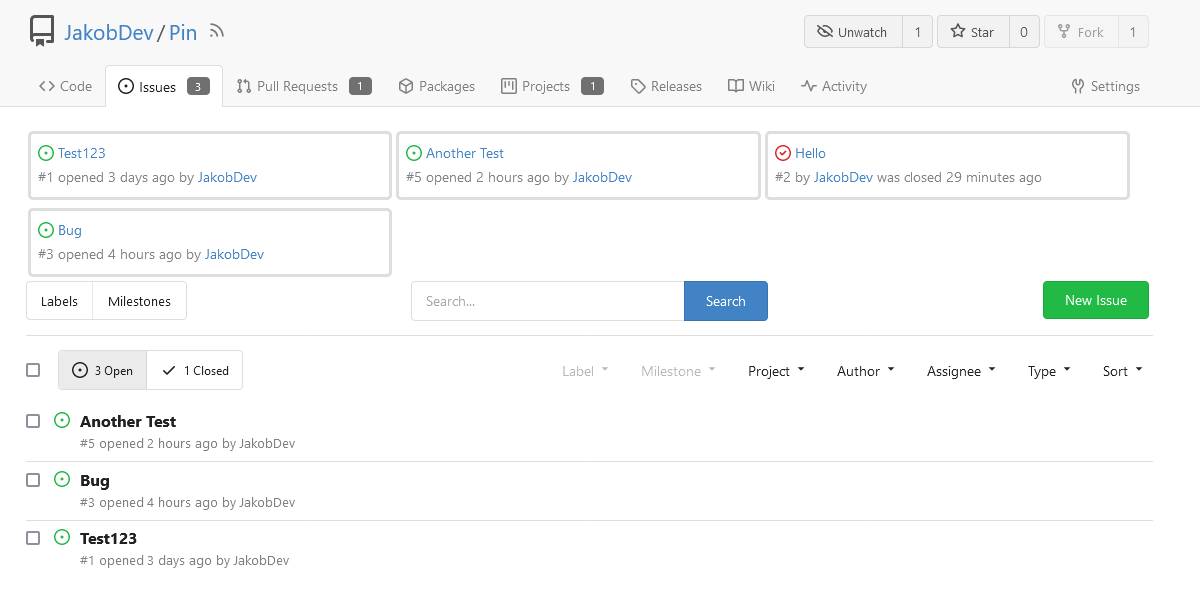

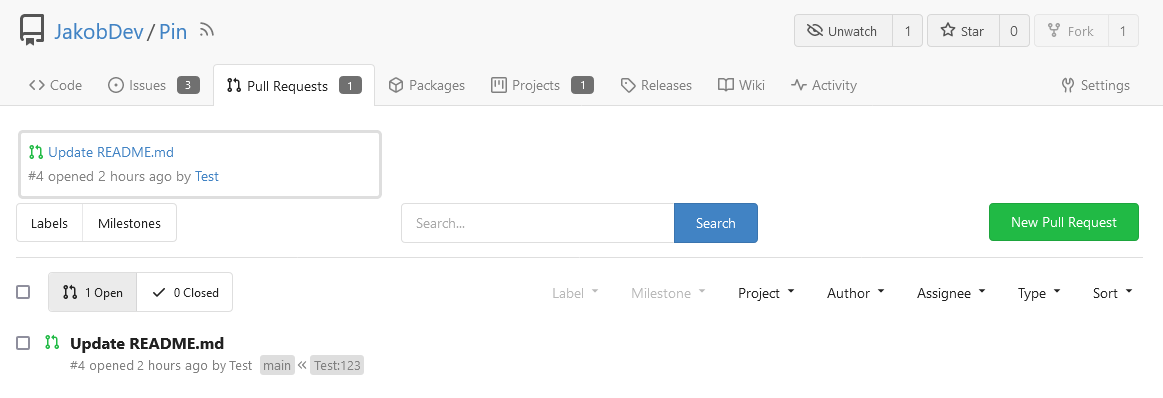

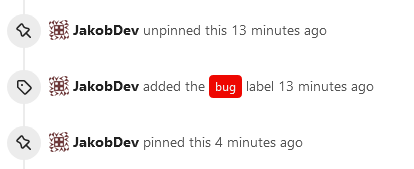

This adds the ability to pin important Issues and Pull Requests. You can
also move pinned Issues around to change their Position. Resolves #2175.

## Screenshots

The Design was mostly copied from the Projects Board.

## Implementation

This uses a new `pin_order` Column in the `issue` table. If the value is

set to 0, the Issue is not pinned. If it's set to a bigger value, the

value is the Position. 1 means it's the first pinned Issue, 2 means it's

the second one, etc. The order is tracked separately for Issues and Pull
Requests in each Repo.
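
The `pin_order` semantics can be sketched with a small in-memory model. This is an illustration under assumptions, not the PR's implementation: the `issue` type, `pin` and `unpin` are hypothetical, and closing the gap on unpin is an assumed behavior.

```go
package main

import "fmt"

// issue is a hypothetical, reduced model of a row in the `issue` table.
// PinOrder 0 means "not pinned"; 1 is the first pinned position.
type issue struct {
	ID       int64
	IsPull   bool
	PinOrder int
}

// pin appends target after the last pinned item of the same kind
// (Issues and Pull Requests are ordered separately).
func pin(all []*issue, target *issue) {
	if target.PinOrder != 0 {
		return // already pinned
	}
	last := 0
	for _, i := range all {
		if i.IsPull == target.IsPull && i.PinOrder > last {
			last = i.PinOrder
		}
	}
	target.PinOrder = last + 1
}

// unpin resets target to 0 and, as an assumption of this sketch, moves
// later pins of the same kind up by one so positions stay contiguous.
func unpin(all []*issue, target *issue) {
	old := target.PinOrder
	if old == 0 {
		return
	}
	target.PinOrder = 0
	for _, i := range all {
		if i.IsPull == target.IsPull && i.PinOrder > old {
			i.PinOrder--
		}
	}
}

func main() {
	a, b, c := &issue{ID: 1}, &issue{ID: 2}, &issue{ID: 3, IsPull: true}
	all := []*issue{a, b, c}
	pin(all, a)
	pin(all, b)
	pin(all, c)
	unpin(all, a)
	fmt.Println(a.PinOrder, b.PinOrder, c.PinOrder)
}
```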

## TODO

- [x] You can currently pin as many Issues as you want. Maybe we should

add a Limit, which is configurable. GitHub uses 3, but I prefer 6, as

this is better for bigger Projects, but I'm open for suggestions.

- [x] Pin and Unpin events need to be added to the Issue history.

- [x] Tests

- [x] Migration

**The feature itself is currently fully working, so testers who may find
weird edge cases are very welcome!**

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Giteabot <teabot@gitea.io>

Replace #16455

Close #21803

Mixing different Gitea contexts together causes some problems:

1. Unable to respond with proper content when an error occurs, e.g. Web
should respond with HTML while API should respond with JSON

2. Unclear dependency, eg: it's unclear when Context is used in

APIContext, which fields should be initialized, which methods are

necessary.

To make things clear, this PR introduces a Base context that only
provides basic Req/Resp/Data features.

This PR mainly moves code. There are still many legacy problems and
TODOs in the code; unrelated changes are left to future PRs.

This PR

- [x] Move some functions from `issues.go` to `issue_stats.go` and

`issue_label.go`

- [x] Remove duplicated issue options `UserIssueStatsOption` to keep

only one `IssuesOptions`

#### Added

- API: Create a branch directly from commit on the create branch API

- Added `old_ref_name` parameter to allow creating a new branch from a

specific commit, tag, or branch.

- Deprecated `old_branch_name` parameter in favor of the new

`old_ref_name` parameter.

---------

Co-authored-by: silverwind <me@silverwind.io>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

The `GetAllCommits` endpoint can be pretty slow, especially in repos

with a lot of commits. The issue is that it spends a lot of time

calculating information that may not be useful/needed by the user.

The `stat` param was previously added in #21337 to address this, by

allowing the user to disable the calculating stats for each commit. But

this has two issues:

1. The name `stat` is rather misleading, because disabling `stat`

disables the Stat **and** Files. This should be separated out into two

different params, because getting a list of affected files is much less

expensive than calculating the stats

2. There's still other costly information provided that the user may not

need, such as `Verification`

This PR adds two parameters to the endpoint, `files` and `verification`,
to allow the user to explicitly disable this information when listing
commits. Both default to true.
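
A default-true query parameter can be parsed as in the sketch below. It only illustrates the described semantics: `boolParam`, `listOptions` and `parseListOptions` are hypothetical helpers, and falling back to the default on an unparsable value is a choice of this sketch.

```go
package main

import (
	"fmt"
	"net/url"
	"strconv"
)

// boolParam reads a boolean query parameter, falling back to def when the
// parameter is absent or cannot be parsed.
func boolParam(q url.Values, name string, def bool) bool {
	raw := q.Get(name)
	if raw == "" {
		return def
	}
	v, err := strconv.ParseBool(raw)
	if err != nil {
		return def
	}
	return v
}

// listOptions is a hypothetical holder for the two new switches.
type listOptions struct {
	Files        bool
	Verification bool
}

// parseListOptions extracts the switches from a raw query string.
// Both default to true, so existing clients see no change.
func parseListOptions(rawQuery string) (listOptions, error) {
	q, err := url.ParseQuery(rawQuery)
	if err != nil {
		return listOptions{}, err
	}
	return listOptions{
		Files:        boolParam(q, "files", true),
		Verification: boolParam(q, "verification", true),
	}, nil
}

func main() {
	for _, rq := range []string{"", "files=false", "files=false&verification=false"} {
		opts, err := parseListOptions(rq)
		fmt.Printf("%q -> %+v %v\n", rq, opts, err)
	}
}
```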

1. Remove unused fields/methods in web context.

2. Make callers call target function directly instead of the light

wrapper like "IsUserRepoReaderSpecific"

3. The "issue template" code shouldn't be put in the "modules/context"

package, so move them to the service package.

---------

Co-authored-by: Giteabot <teabot@gitea.io>

The "modules/context.go" is too large to maintain.

This PR splits it into separate files, e.g. context_request.go,
context_response.go, context_serve.go

This PR will help:

1. The future refactoring for Gitea's web context (eg: simplify the context)

2. Introduce proper "range request" support

3. Introduce context function

This PR only moves code, doesn't change any logic.

Due to #24409, we can now specify '--not' when getting all commits from
a repo to exclude commits from a different branch.

When I wrote that PR, I forgot to also update the code that counts the

number of commits in the repo. So now, if the --not option is used, it

may return too many commits, which can indicate that another page of

data is available when it is not.

This PR passes --not to the commands that count the number of commits in

a repo

I don't remember why it was previously decided that `Code` and `Release`
cannot be disabled globally. Since every unit, including `Code`, can now
be disabled, maybe we should have a new rule that a repo must have at
least one unit. That way, any unit can be disabled.

Fixes #20960

Fixes #7525

---------

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: yp05327 <576951401@qq.com>

On the @Forgejo instance of Codeberg, we discovered that forking a repo

which is already forked now returns a 500 Internal Server Error, which

is unexpected. This is an attempt at fixing this.

The error message in the log:

~~~

2023/05/02 08:36:30 .../api/v1/repo/fork.go:147:CreateFork() [E]

[6450cb8e-113] ForkRepository: repository is already forked by user

[uname: ...., repo path: .../..., fork path: .../...]

~~~

The service that is used for forking returns a custom error message

which is not checked against.

About the order of options:

The case that the fork already exists should be more common, followed by

the case that a repo with the same name already exists for other

reasons. The case that the global repo limit is hit is probably not the

likeliest.

Co-authored-by: Otto Richter <otto@codeberg.org>

Co-authored-by: Giteabot <teabot@gitea.io>