Import and inference INT8 quantized TFLite model #23409

### Pull Request Readiness Checklist

* Support quantized TFLite models

* Enable fused activations (FP32, INT8)
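
For readers unfamiliar with INT8 models, here is a minimal sketch of the affine quantization scheme that TFLite quantized tensors use, where a real value is approximated as `scale * (q - zero_point)`. The function names and the sample `scale` / `zero_point` values are illustrative assumptions, not code from this PR.

```python
# Illustrative sketch of affine INT8 quantization (not the OpenCV implementation).
# real_value ~= scale * (q - zero_point), with q stored as a signed 8-bit integer.

INT8_MIN, INT8_MAX = -128, 127


def quantize(x, scale, zero_point):
    """Map a float to INT8, saturating at the INT8 range."""
    q = int(round(x / scale)) + zero_point
    return max(INT8_MIN, min(INT8_MAX, q))


def dequantize(q, scale, zero_point):
    """Map an INT8 value back to an approximate float."""
    return scale * (q - zero_point)


# Hypothetical quantization parameters chosen only for the example.
scale, zero_point = 0.02, -3
q = quantize(0.5, scale, zero_point)
restored = dequantize(q, scale, zero_point)
saturated = quantize(100.0, scale, zero_point)  # out of range, clamps to INT8_MAX
```

Values outside the representable range saturate rather than wrap, which is why `saturated` ends up at `INT8_MAX`.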

**Merge with extra**: https://github.com/opencv/opencv_extra/pull/1048

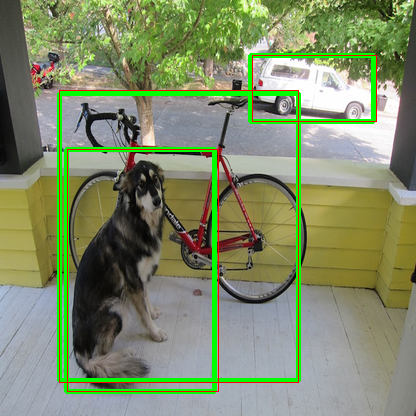

In the image, green boxes are from TFLite and red boxes are from OpenCV.

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

Fix ONNX parser for single-layer LSTM hidden and cell states #23475


### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

This PR addresses issue [#21118](https://github.com/opencv/opencv/issues/21118). The ONNX parser was unable to read the hidden state and cell state of single-layer LSTMs; this PR updates the parser to read both states correctly.

DNN: Add New API blobFromImageParam #22750

The purpose of this PR:

1. Add a new API, `blobFromImageParam`, that extends `blobFromImage`. It supports different data layouts (NCHW or NHWC) and letterbox resizing (`letter_box`).

2. ~~`blobFromImage` can output `CV_16F`~~
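
To illustrate the two options mentioned above, here is a rough sketch of the geometry behind letterbox resizing and of how the blob shape differs between NCHW and NHWC. The helper names are hypothetical and the centered padding is an assumption; this is not the code added by the PR.

```python
# Illustrative sketch only: helper names are hypothetical, not OpenCV API.

def letterbox_geometry(src_w, src_h, dst_w, dst_h):
    """Scale the image to fit the target while keeping its aspect ratio,
    then pad the remainder (centered padding is assumed here)."""
    scale = min(dst_w / src_w, dst_h / src_h)
    new_w = int(round(src_w * scale))
    new_h = int(round(src_h * scale))
    pad_left = (dst_w - new_w) // 2
    pad_top = (dst_h - new_h) // 2
    pad_right = dst_w - new_w - pad_left
    pad_bottom = dst_h - new_h - pad_top
    return new_w, new_h, (pad_left, pad_top, pad_right, pad_bottom)


def blob_shape(n, c, h, w, layout="NCHW"):
    """Return the 4D blob shape for the requested data layout."""
    dims = {"N": n, "C": c, "H": h, "W": w}
    return tuple(dims[axis] for axis in layout)


# A 1280x720 frame fitted into a 640x640 network input.
new_w, new_h, pads = letterbox_geometry(1280, 720, 640, 640)
nchw = blob_shape(1, 3, 640, 640, "NCHW")
nhwc = blob_shape(1, 3, 640, 640, "NHWC")
```

In this example the frame is scaled to 640x360 and padded by 140 rows at the top and bottom, so the content is not stretched.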

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [ ] There is a reference to the original bug report and related work

- [ ] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [ ] The feature is well documented and sample code can be built with the project CMake

dnn: Support more operators in CANN backend #23401

This PR adds support for the following layers:

- [x] Sub

- [x] PRelu

- [x] DeConv

- [x] Also warn users when the backend falls back to the default because some layers are not supported.

- [ ] [Dropped] LSTM: some hacks (added layers) were introduced, which make it even harder to build the graph for the CANN backend.

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

Added LSTM and GRU tests for various batch and input length sizes #23501

Added tests with various sequence lengths and batch sizes.

Test data: https://github.com/opencv/opencv_extra/pull/1057

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [ ] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

Fix identifying initializers in ONNX graph simplification #23296

Fixes https://github.com/opencv/opencv/issues/23295

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

Propagate inputs info for ONNX and TFLite models

Needed for generic applications such as benchmarking pipelines, so that OpenCV can report the default input shapes specified in the models.

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

Four tests in opencv_test_dnn fail, as listed below:

* Test_Caffe_layers.Conv_Elu/0, where GetParam() = OCV/CPU

* Test_ONNX_layers.ConvResizePool1d/0, where GetParam() = OCV/CPU

* Test_TensorFlow_layers.tf_reshape_nhwc/0, where GetParam() = OCV/CPU

* Test_Torch_layers.net_inception_block/0, where GetParam() = OCV/CPU

In the winofunc_AtXA_8x8_f32 and winofunc_BtXB_8x8_f32 implementations, incorrect input parameters cause the test failures. Add four new variables for the last four input parameters of v_transpose4x4 to fix the bugs, and update the related comments.
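
As an illustration of why separate variables for the last four parameters can matter, here is a small sketch under the assumption that the failure came from the output arguments aliasing the input arguments. The Python `transpose4x4` below is a hypothetical stand-in, not the OpenCV intrinsic.

```python
# Hypothetical stand-in for a 4x4 transpose that writes its outputs row by row.
# Assumption: the real failure was caused by outputs aliasing the inputs.

def transpose4x4(a0, a1, a2, a3, b0, b1, b2, b3):
    """Write the transpose of rows a0..a3 into rows b0..b3."""
    src = (a0, a1, a2, a3)
    dst = (b0, b1, b2, b3)
    for i in range(4):
        for j in range(4):
            dst[i][j] = src[j][i]


def make_rows():
    return [[4 * r + c for c in range(4)] for r in range(4)]


expected = [[4 * r + c for r in range(4)] for c in range(4)]

# Aliased call: outputs are the same objects as the inputs, so later reads
# see values that earlier writes have already overwritten.
x = make_rows()
transpose4x4(x[0], x[1], x[2], x[3], x[0], x[1], x[2], x[3])
aliased_ok = (x == expected)

# Separate output variables: every input is still intact when it is read.
x = make_rows()
t = [[0] * 4 for _ in range(4)]
transpose4x4(x[0], x[1], x[2], x[3], t[0], t[1], t[2], t[3])
separate_ok = (t == expected)
```

With aliased arguments the result is corrupted, while distinct output variables give the correct transpose.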

Signed-off-by: tingbo.liao <tingbo.liao@starfivetech.com>

Related issue: https://github.com/opencv/opencv_zoo/issues/136

Features added:

- Support operators with multiple outputs: ONNX Split.

- Support Slice without steps.

Bugs fixed:

- Wrong settings in ClipByValue (Relu6).

- Wrong calculation of pads in the convolution layer (the calculation is wrong in general, but for now it is fixed only for CANN).
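
For context on the ClipByValue fix, ReLU6 can be expressed as a clip with a lower bound of 0 and an upper bound of 6. The sketch below only shows that relationship; the function names are illustrative and it does not reproduce the CANN operator settings changed here.

```python
# Illustrative sketch: ReLU6 written as a clip to the range [0, 6].

def clip_by_value(x, lo, hi):
    """Clamp x to the closed interval [lo, hi]."""
    return max(lo, min(hi, x))


def relu6(x):
    return clip_by_value(x, 0.0, 6.0)


outputs = [relu6(v) for v in (-1.0, 3.0, 7.0)]
```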

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

dnn: add layer normalization for vision transformers

* add layer norm onnx parser, impl and tests

* add onnx graph simplifier for layer norm expanded

* handle the case when constants are of type Initializer

* add test case for layer norm expanded with initializers

* use CV_Assert & CV_CheckType in place of CV_Assert_N; use forward_fallback for OCL_FP16

* use const ref / ref in parameters of invoker::run; extract inner const if from nested loop; use size_t in place of ull

* template hasBias

* remove trailing whitespace

* use pointer parameter with null check; move normSize division & mean_square division outside of loop; use std::max to ensure positive value before std::sqrt

* refactor implementation, optimize parallel_for

* disable layer norm expanded

* remove the removal of layer norm optional outputs
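
As a reference for what the layer computes, here is a minimal sketch of layer normalization over one vector. It computes the variance from the mean of squares and clamps it to be non-negative before the square root, loosely following the commit notes above. The function name, signature and default `eps` are assumptions, not the OpenCV implementation.

```python
import math

# Illustrative sketch of layer normalization over a single normalized axis.

def layer_norm(x, scale, bias=None, eps=1e-5):
    n = len(x)
    mean = sum(x) / n
    mean_square = sum(v * v for v in x) / n
    # Rounding can make this difference slightly negative, so clamp it.
    var = max(mean_square - mean * mean, 0.0)
    inv_std = 1.0 / math.sqrt(var + eps)
    out = []
    for i, v in enumerate(x):
        y = (v - mean) * inv_std * scale[i]
        if bias is not None:  # bias is optional
            y += bias[i]
        out.append(y)
    return out


normalized = layer_norm([1.0, 2.0, 3.0, 4.0], scale=[1.0] * 4)
constant = layer_norm([2.0, 2.0, 2.0, 2.0], scale=[1.0] * 4, bias=[0.5] * 4)
```

A constant input normalizes to zeros, so `constant` contains only the bias values.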