

Camera calibration With OpenCV

Cameras have been around for a long, long time. However, with the introduction of cheap pinhole cameras in the late 20th century, they became a common occurrence in our everyday life. Unfortunately, this cheapness comes at a price: significant distortion. Luckily, these distortions are constant, and with calibration and some remapping we can correct them. Furthermore, with calibration you can also determine the relation between the camera's natural units (pixels) and real-world units (for example, millimeters).

Theory

For the distortion OpenCV takes into account the radial and tangential factors. For the radial factor one uses the following formula:

\f[x_{distorted} = x( 1 + k_1 r^2 + k_2 r^4 + k_3 r^6) \\ y_{distorted} = y( 1 + k_1 r^2 + k_2 r^4 + k_3 r^6)\f]

So for an undistorted point at normalized image coordinates \f$(x,y)\f$ (that is, before the camera matrix described below is applied), with \f$r^2 = x^2 + y^2\f$, its position in the distorted image will be \f$(x_{distorted}, y_{distorted})\f$. Radial distortion manifests itself as the "barrel" or "fish-eye" effect.

Tangential distortion occurs because the image-taking lens is not perfectly parallel to the imaging plane. It can be represented via the formulas:

\f[x_{distorted} = x + [ 2p_1xy + p_2(r^2+2x^2)] \\ y_{distorted} = y + [ p_1(r^2+ 2y^2)+ 2p_2xy]\f]

So we have five distortion parameters, which OpenCV presents as a one-row matrix with five columns:

\f[distortion\_coefficients=(k_1 \hspace{10pt} k_2 \hspace{10pt} p_1 \hspace{10pt} p_2 \hspace{10pt} k_3)\f]

Now for the unit conversion we use the following formula:

\f[\left [ \begin{matrix} x \\ y \\ w \end{matrix} \right ] = \left [ \begin{matrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{matrix} \right ] \left [ \begin{matrix} X \\ Y \\ Z \end{matrix} \right ]\f]

Here the presence of \f$w\f$ is explained by the use of homogeneous coordinates (and \f$w=Z\f$): the pixel position is obtained as \f$(x/w, y/w)\f$. The unknown parameters are \f$f_x\f$ and \f$f_y\f$ (camera focal lengths) and \f$(c_x, c_y)\f$, the optical center (principal point) expressed in pixel coordinates. If a common focal length is used for both axes with a given aspect ratio \f$a\f$ (usually 1), then \f$f_y = f_x \cdot a\f$, and in the formula above we will have a single focal length \f$f\f$. The matrix containing these four parameters is referred to as the camera matrix. While the distortion coefficients are the same regardless of the camera resolution used, the camera matrix entries should be scaled from the calibrated resolution to the current one.

The process of determining these two matrices is the calibration. Calculation of these parameters is done through basic geometrical equations. The equations used depend on the chosen calibrating objects. Currently OpenCV supports three types of objects for calibration:

- Classical black-white chessboard

- Symmetrical circle pattern

- Asymmetrical circle pattern

Basically, you need to take snapshots of these patterns with your camera and let OpenCV find them. Each found pattern results in a new equation. To solve the equation you need at least a predetermined number of pattern snapshots to form a well-posed equation system. This number is higher for the chessboard pattern and lower for the circle ones. For example, in theory the chessboard pattern requires at least two snapshots. However, in practice there is a fair amount of noise in the input images, so for good results you will probably need at least 10 good snapshots of the input pattern in different positions.
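As a back-of-the-envelope check of the "at least two snapshots" figure, here is the usual counting argument from plane-based calibration (Zhang's method). It assumes no lens distortion and zero skew, and it only illustrates the idea; it does not describe OpenCV's internal solver. Each view of a planar pattern determines a homography \f$H\f$ with 8 degrees of freedom, 6 of which are taken up by the unknown pose of the board (3 for rotation, 3 for translation), so each of the \f$n\f$ views leaves 2 constraints on the 4 intrinsic parameters:

\f[\underbrace{8}_{\mbox{DOF of } H} - \underbrace{6}_{\mbox{board pose}} = 2 \mbox{ constraints per view}, \qquad 2n \geq 4 \; (f_x, f_y, c_x, c_y) \;\Rightarrow\; n \geq 2\f]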

Goal

The sample application will:

- Determine the distortion matrix

- Determine the camera matrix

- Take input from Camera, Video and Image file list

- Read configuration from XML/YAML file

- Save the results into XML/YAML file

- Calculate re-projection error

Source code

You may also find the source code in the samples/cpp/tutorial_code/calib3d/camera_calibration/ folder of the OpenCV source library or download it from here. For the usage of the program, run it with the -h argument. The program has an essential argument: the name of its configuration file. If none is given, it will try to open the one named "default.xml". Here's a sample configuration file in XML format. In the configuration file you may choose to use a camera, a video file or an image list as input. If you opt for the last one, you will need to create a configuration file where you enumerate the images to use. Here's an example of this.

The important part to remember is that the images need to be specified using the absolute path or the relative one from your application's working directory. You may find all this in the samples directory mentioned above.

The application starts by reading the settings from the configuration file. Although this is an important part of it, it has nothing to do with the subject of this tutorial: camera calibration. Therefore, I've chosen not to post the code for that part here. You can find the technical background on how to do this in the @ref tutorial_file_input_output_with_xml_yml tutorial.

Explanation

-# Read the settings

@snippet samples/cpp/tutorial_code/calib3d/camera_calibration/camera_calibration.cpp file_read

For this I've used a simple OpenCV class input operation. After reading the file, an additional post-processing function checks the validity of the input. Only if all inputs are good will the *goodInput* variable be true.

-# Get next input, if it fails or we have enough of them - calibrate

After this we have a big loop where we do the following operations: get the next image from the image list, camera or video file. If this fails or we have enough images, then we run the calibration process. In the case of an image list we step out of the loop; otherwise, the remaining frames will be undistorted (if the option is set) by changing from *DETECTION* mode to the *CALIBRATED* one.

@snippet samples/cpp/tutorial_code/calib3d/camera_calibration/camera_calibration.cpp get_input

For some cameras we may need to flip the input image. Here we do this too.

-# Find the pattern in the current input

The formation of the equations I mentioned above aims at finding major patterns in the input: in the case of the chessboard these are the corners of the squares, and for the circles, well, the circles themselves. The positions of these will form the result, which will be written into the *pointBuf* vector.

@snippet samples/cpp/tutorial_code/calib3d/camera_calibration/camera_calibration.cpp find_pattern

Depending on the type of the input pattern, you use either the @ref cv::findChessboardCorners or the @ref cv::findCirclesGrid function. For both of them you pass the current image and the size of the board, and you'll get the positions of the patterns. Furthermore, they return a boolean variable which states whether the pattern was found in the input (we only need to take into account those images where this is true!).

Then again, in the case of cameras we only take camera images after an input delay time has passed. This is done in order to allow the user to move the chessboard around and get different images. Similar images result in similar equations, and similar equations at the calibration step will form an ill-posed problem, so the calibration will fail. For the chessboard pattern, the positions of the detected corners are only approximate. We may improve this by calling the @ref cv::cornerSubPix function (`winSize` controls the size of the search window; its default value is 11, and it may be changed with the command line parameter `--winSize=<number>`). This produces a better calibration result. After this we add a valid input's result to the *imagePoints* vector to collect all of the equations into a single container. Finally, for visualization feedback purposes, we draw the found points on the input image using the @ref cv::drawChessboardCorners function.

@snippet samples/cpp/tutorial_code/calib3d/camera_calibration/camera_calibration.cpp pattern_found

-# Show state and result to the user, plus command line control of the application

This part shows text output on the image.

@snippet samples/cpp/tutorial_code/calib3d/camera_calibration/camera_calibration.cpp output_text

If we ran the calibration and got the camera matrix with the distortion coefficients, we may want to correct the image using the @ref cv::undistort function:

@snippet samples/cpp/tutorial_code/calib3d/camera_calibration/camera_calibration.cpp output_undistorted

Then we show the image and wait for an input key: if this is *u* we toggle the distortion removal, if it is *g* we start the detection process again, and finally for the *ESC* key we quit the application:

@snippet samples/cpp/tutorial_code/calib3d/camera_calibration/camera_calibration.cpp await_input

-# Show the distortion removal for the images too

When you work with an image list, it is not possible to remove the distortion inside the loop. Therefore, you must do this after the loop. Taking advantage of this, I'll now expand the @ref cv::undistort function, which in fact first calls @ref cv::initUndistortRectifyMap to find the transformation matrices and then performs the transformation using the @ref cv::remap function. Because, after a successful calibration, the map calculation needs to be done only once, you may speed up your application by using this expanded form:

@snippet samples/cpp/tutorial_code/calib3d/camera_calibration/camera_calibration.cpp show_results
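The benefit of the expanded form is that the expensive per-pixel geometry is computed once and then reused for every frame. The following dependency-free sketch illustrates that split for a grayscale image stored row-major. It is a simplification of what @ref cv::initUndistortRectifyMap and @ref cv::remap do (no rectification rotation, the new camera matrix equals the calibrated one, nearest-neighbour sampling instead of interpolation), and the names `UndistortMap`, `buildUndistortMap` and `applyMap` are hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper types (not OpenCV's): the four camera-matrix entries and
// the five distortion coefficients in OpenCV's order (k1, k2, p1, p2, k3).
struct Intrinsics { double fx, fy, cx, cy; };
struct DistCoeffs { double k1 = 0, k2 = 0, p1 = 0, p2 = 0, k3 = 0; };

// Lookup table: for each pixel of the undistorted output image, the pixel of
// the distorted input image to sample from (-1 if it falls outside the input).
struct UndistortMap {
    int width = 0, height = 0;
    std::vector<int> srcX, srcY;
};

// Expensive part, done once after calibration.
UndistortMap buildUndistortMap(const Intrinsics& K, const DistCoeffs& d, int width, int height) {
    UndistortMap m;
    m.width = width;
    m.height = height;
    const std::size_t count = static_cast<std::size_t>(width) * static_cast<std::size_t>(height);
    m.srcX.assign(count, -1);
    m.srcY.assign(count, -1);
    for (int v = 0; v < height; ++v) {
        for (int u = 0; u < width; ++u) {
            // Output pixel -> normalized coordinates of the ideal (undistorted) ray.
            const double x = (u - K.cx) / K.fx;
            const double y = (v - K.cy) / K.fy;
            // Apply radial + tangential distortion to find where that ray lands.
            const double r2 = x * x + y * y;
            const double radial = 1.0 + d.k1 * r2 + d.k2 * r2 * r2 + d.k3 * r2 * r2 * r2;
            const double xd = x * radial + 2.0 * d.p1 * x * y + d.p2 * (r2 + 2.0 * x * x);
            const double yd = y * radial + d.p1 * (r2 + 2.0 * y * y) + 2.0 * d.p2 * x * y;
            // Back to pixel coordinates of the distorted input (nearest neighbour).
            const long su = std::lround(K.fx * xd + K.cx);
            const long sv = std::lround(K.fy * yd + K.cy);
            if (su >= 0 && su < width && sv >= 0 && sv < height) {
                const std::size_t i = static_cast<std::size_t>(v) * static_cast<std::size_t>(width)
                                      + static_cast<std::size_t>(u);
                m.srcX[i] = static_cast<int>(su);
                m.srcY[i] = static_cast<int>(sv);
            }
        }
    }
    return m;
}

// Cheap part, done for every frame: one table lookup per pixel.
// src must hold width * height grayscale pixels in row-major order.
std::vector<std::uint8_t> applyMap(const UndistortMap& m, const std::vector<std::uint8_t>& src) {
    std::vector<std::uint8_t> dst(src.size(), 0);  // unmapped pixels stay black
    for (std::size_t i = 0; i < dst.size() && i < m.srcX.size(); ++i) {
        if (m.srcX[i] >= 0) {
            const std::size_t j = static_cast<std::size_t>(m.srcY[i]) * static_cast<std::size_t>(m.width)
                                  + static_cast<std::size_t>(m.srcX[i]);
            dst[i] = src[j];
        }
    }
    return dst;
}
```

In a capture loop, `buildUndistortMap` would be called once after calibration and `applyMap` once per frame, mirroring the split between computing the maps and remapping each image.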

The calibration and save

Because the calibration needs to be done only once per camera, it makes sense to save it after a successful calibration. This way, later on you can just load these values into your program. Therefore, we first perform the calibration, and if it succeeds, we save the result into an OpenCV-style XML or YAML file, depending on the extension you give in the configuration file.

Therefore, in the first function we just split up these two processes. Because we want to save many of the calibration variables, we'll create these variables here and pass them on to both the calibration and the saving function. Again, I'll not show the saving part, as it has little in common with the calibration. Explore the source file in order to find out how and what:

@snippet samples/cpp/tutorial_code/calib3d/camera_calibration/camera_calibration.cpp run_and_save

We do the calibration with the help of the @ref cv::calibrateCameraRO function. It has the following parameters:

-

The object points. This is a vector of Point3f vectors that, for each input image, describes how the pattern should look. If we have a planar pattern (like a chessboard), then we can simply set all Z coordinates to zero. It lists the positions of the important points (chessboard corners or circle centers) in the pattern's own coordinate system. Because we use a single pattern for all the input images, we can calculate this just once and replicate it for all the other input views. We calculate the corner points with the calcBoardCornerPositions function as:

@snippet samples/cpp/tutorial_code/calib3d/camera_calibration/camera_calibration.cpp board_corners

And then replicate it as:

@code{.cpp}
vector<vector<Point3f> > objectPoints(1);
calcBoardCornerPositions(s.boardSize, s.squareSize, objectPoints[0], s.calibrationPattern);
objectPoints[0][s.boardSize.width - 1].x = objectPoints[0][0].x + grid_width;
newObjPoints = objectPoints[0];

objectPoints.resize(imagePoints.size(), objectPoints[0]);
@endcode

@note If you use an inaccurate, unmeasured, roughly planar target (checkerboard patterns printed on paper with off-the-shelf printers are the most convenient calibration targets, but most of them are not accurate enough), a method from @cite strobl2011iccv can be utilized to dramatically improve the accuracy of the estimated camera intrinsic parameters. This new calibration method will be called if the command line parameter `-d=<number>` is provided. In the above code snippet, `grid_width` is actually the value set by `-d=<number>`. It is the measured distance between the top-left (0, 0, 0) and top-right (s.squareSize*(s.boardSize.width-1), 0, 0) corners of the pattern grid points. It should be measured precisely with rulers or vernier calipers. After calibration, `newObjPoints` will be updated with the refined 3D coordinates of the object points.

-

The image points. This is a vector of Point2f vector which for each input image contains coordinates of the important points (corners for chessboard and centers of the circles for the circle pattern). We have already collected this from @ref cv::findChessboardCorners or @ref cv::findCirclesGrid function. We just need to pass it on.

-

The size of the image acquired from the camera, video file or the images.

-

The index of the object point to be fixed. We set it to -1 to request the standard calibration method. If the new object-releasing method is to be used, set it to the index of the top-right corner point of the calibration board grid. See cv::calibrateCameraRO for a detailed explanation.

@code{.cpp}
int iFixedPoint = -1;
if (release_object)
    iFixedPoint = s.boardSize.width - 1;
@endcode

-

The camera matrix. If we used the fixed aspect ratio option, we need to set \f$f_x\f$:

@snippet samples/cpp/tutorial_code/calib3d/camera_calibration/camera_calibration.cpp fixed_aspect

-

The distortion coefficient matrix. Initialize it with zeros.

@code{.cpp}
distCoeffs = Mat::zeros(8, 1, CV_64F);
@endcode

-

For all the views the function will calculate rotation and translation vectors which transform the object points (given in the model coordinate space) into the camera coordinate space, from which they are projected onto the image points (given in pixel coordinates). The 7th and 8th parameters are the output vectors of matrices containing, in the i-th position, the rotation and translation vector of the i-th view, i.e. the pose that maps the i-th set of object points to the i-th set of image points.

-

The updated output vector of calibration pattern points. This parameter is ignored with the standard calibration method.

-

The final argument is the flag. Here you can specify options such as fixing the aspect ratio for the focal length, assuming zero tangential distortion, or fixing the principal point. Here we use CALIB_USE_LU to get a faster calibration, at the cost of potentially lower precision and stability in some rare cases.

@code{.cpp}
rms = calibrateCameraRO(objectPoints, imagePoints, imageSize, iFixedPoint,
                        cameraMatrix, distCoeffs, rvecs, tvecs, newObjPoints,
                        s.flag | CALIB_USE_LU);
@endcode

-

The function returns the overall RMS re-projection error. This number gives a good estimate of the precision of the found parameters and should be as close to zero as possible. Given the intrinsic, distortion, rotation and translation matrices, we may calculate the error for one view by using @ref cv::projectPoints to first transform the object points to image points. Then we calculate the L2 norm of the difference between what we got with our transformation and the output of the corner/circle finding algorithm. To get an overall figure, we accumulate the squared errors of all the calibration images and take the square root of their mean over all points:

@snippet samples/cpp/tutorial_code/calib3d/camera_calibration/camera_calibration.cpp compute_errors
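Two pieces of the parameter list above are plain arithmetic and can be sketched without OpenCV: the planar object-point layout produced by a `calcBoardCornerPositions`-style helper, and the RMS re-projection error. The code is illustrative only: `Point2`, `Point3`, `Pattern`, `boardCornerPositions` and `rmsReprojectionError` are hypothetical names, the asymmetric-grid layout (every other row shifted by one square size) is an assumption about the sample's convention, and in practice the projected points would come from @ref cv::projectPoints.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins for cv::Point3f and cv::Point2f.
struct Point3 { float x, y, z; };
struct Point2 { float x, y; };

enum class Pattern { Chessboard, CirclesGrid, AsymmetricCirclesGrid };

// Object points of a planar target: all Z = 0, listed row by row.
std::vector<Point3> boardCornerPositions(int width, int height, float squareSize, Pattern pattern) {
    std::vector<Point3> corners;
    corners.reserve(static_cast<std::size_t>(width) * static_cast<std::size_t>(height));
    for (int i = 0; i < height; ++i) {
        for (int j = 0; j < width; ++j) {
            // In an asymmetric circle grid, odd rows are offset by one square size.
            const float x = (pattern == Pattern::AsymmetricCirclesGrid)
                                ? static_cast<float>(2 * j + i % 2) * squareSize
                                : static_cast<float>(j) * squareSize;
            corners.push_back({x, static_cast<float>(i) * squareSize, 0.0f});
        }
    }
    return corners;
}

// RMS re-projection error over all points of all views; perViewErrors[v]
// receives the RMS error of view v alone. detected[v] and projected[v]
// must have the same length.
double rmsReprojectionError(const std::vector<std::vector<Point2>>& detected,
                            const std::vector<std::vector<Point2>>& projected,
                            std::vector<double>& perViewErrors) {
    double totalSq = 0.0;
    std::size_t totalPoints = 0;
    perViewErrors.assign(detected.size(), 0.0);
    for (std::size_t v = 0; v < detected.size(); ++v) {
        double viewSq = 0.0;  // squared L2 norm of all residuals of this view
        for (std::size_t k = 0; k < detected[v].size(); ++k) {
            const double dx = static_cast<double>(detected[v][k].x) - projected[v][k].x;
            const double dy = static_cast<double>(detected[v][k].y) - projected[v][k].y;
            viewSq += dx * dx + dy * dy;
        }
        const std::size_t n = detected[v].size();
        if (n > 0) perViewErrors[v] = std::sqrt(viewSq / static_cast<double>(n));
        totalSq += viewSq;
        totalPoints += n;
    }
    return totalPoints > 0 ? std::sqrt(totalSq / static_cast<double>(totalPoints)) : 0.0;
}
```

For the 9 X 6 chessboard used below with a square size of 25, the top-right corner (index 8) is at (200, 0, 0), which is the point whose x coordinate the object-releasing method adjusts with `grid_width`.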

Results

Let there be this input chessboard pattern, which has a size of 9 X 6. I've used an AXIS IP camera to create a couple of snapshots of the board and saved them into the VID5 directory. I've put this inside the images/CameraCalibration folder of my working directory and created the following VID5.XML file that describes which images to use:

@code{.xml}
<opencv_storage>
<images>
images/CameraCalibration/VID5/xx1.jpg
images/CameraCalibration/VID5/xx2.jpg
images/CameraCalibration/VID5/xx3.jpg
images/CameraCalibration/VID5/xx4.jpg
images/CameraCalibration/VID5/xx5.jpg
images/CameraCalibration/VID5/xx6.jpg
images/CameraCalibration/VID5/xx7.jpg
images/CameraCalibration/VID5/xx8.jpg
</images>
</opencv_storage>
@endcode

Then I passed images/CameraCalibration/VID5/VID5.XML as an input in the configuration file. Here's a chessboard pattern found during the runtime of the application:

After applying the distortion removal we get:

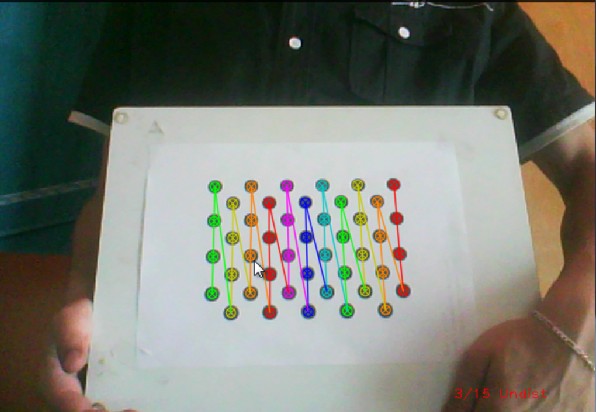

The same works for this asymmetrical circle pattern by setting the input width to 4 and height to 11. This time I've used a live camera feed by specifying its ID ("1") for the input. Here's how a detected pattern should look:

In both cases, in the specified output XML/YAML file you'll find the camera and distortion coefficients matrices:

@code{.xml}
<camera_matrix type_id="opencv-matrix">
<rows>3</rows>
<cols>3</cols>
...
@endcode

You may observe a runtime instance of this on YouTube here.

@youtube{ViPN810E0SU}