OCL_FP16 MatMul with large batch

* Workaround FP16 MatMul with large batch

* Fix OCL reinitialization

* Higher thresholds for INT8 quantization

* Try fix gemm_buffer_NT for half (columns)

* Fix GEMM by rows

* Add batch dimension to InnerProduct layer test

* Fix Test_ONNX_conformance.Layer_Test/test_basic_conv_with_padding

* Batch 16

* Replace all vload4

* Version suffix for MobileNetSSD_deploy Caffe model

dnn: cleanup of tengine backend #24122

Cleanup for OpenCV 5.0. The Tengine backend was added to speed up the convolution layer on ARM CPUs, but it is no longer maintained, and the convolution layer in our default backend now reaches performance similar to Tengine's.

Tengine backend related PRs:

- https://github.com/opencv/opencv/pull/16724

- https://github.com/opencv/opencv/pull/18323

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

Invalid memory access fix for ONNX split layer parser #24076 #24101

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work https://github.com/opencv/opencv/issues/24076

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

TFLite models on different backends (tests and improvements) #24039

### Pull Request Readiness Checklist

* MaxUnpooling with OpenVINO

* Fully connected with transposed inputs/weights with OpenVINO

* Enable backends tests for TFLite (related to https://github.com/opencv/opencv/issues/23992#issuecomment-1640691722)

* Increase existing tests thresholds

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

Resolve uncovered CUDA dnn layer #24080

### Pull Request Readiness Checklist

* Gelu activation layer on CUDA

* Try to relax GEMM from ONNX

resolves https://github.com/opencv/opencv/issues/24064

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

Remove legacy nGraph logic #24072

### Pull Request Readiness Checklist

TODO:

- [x] Test with OpenVINO 2021.4 (tested locally)

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [ ] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

DetectionOutput layer on OpenVINO without limitations #24069

### Pull Request Readiness Checklist

required for https://github.com/opencv/opencv/pull/23987

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [ ] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

PReLU with element-wise scales #24056

### Pull Request Readiness Checklist

resolves https://github.com/opencv/opencv/issues/24051

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

Update opencv dnn to support cann version >=6.3 #23936

1. Modify the search path of "libopsproto.so" in OpenCVFindCANN.cmake.

2. Add the search path of "libgraph_base.so" in OpenCVFindCANN.cmake.

3. Automatically check the Ascend socVersion; tested successfully on Ascend310, Ascend310B and Ascend910B.

[TFLite] Pack layer and other fixes for SSD from Keras #24004

### Pull Request Readiness Checklist

resolves https://github.com/opencv/opencv/issues/23992

**Merge with extra**: https://github.com/opencv/opencv_extra/pull/1076

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

DNN: optimize the speed of general Depth-wise #23952

Try to solve the issue: https://github.com/opencv/opencv/issues/23941

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [ ] There is a reference to the original bug report and related work

- [ ] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [ ] The feature is well documented and sample code can be built with the project CMake

dnn: disable warning when loading a fp16 model #23853

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [ ] There is a reference to the original bug report and related work

- [ ] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [ ] The feature is well documented and sample code can be built with the project CMake

Build Java without ANT #23724

### Pull Request Readiness Checklist

Enables a path of building Java bindings without ANT

* Able to build OpenCV JAR and Docs without ANT

```
-- Java:
-- ant: NO
-- JNI: /usr/lib/jvm/default-java/include /usr/lib/jvm/default-java/include/linux /usr/lib/jvm/default-java/include
-- Java wrappers: YES
-- Java tests: NO
```

* Possible to build OpenCV JAR without ANT but tests still require ANT

**Merge with**: https://github.com/opencv/opencv_contrib/pull/3502

Notes:

- Use `OPENCV_JAVA_IGNORE_ANT=1` to force "Java" flow for building Java bindings

- Java tests still require Apache ANT

- JAR doesn't include `.java` source code files.

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [ ] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

DNN: fix bug for X86 Winograd #23763

Address https://github.com/opencv/opencv/issues/23760

The patch aims to add a runtime check for X86 platform without AVX(2).

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [ ] There is a reference to the original bug report and related work

- [ ] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [ ] The feature is well documented and sample code can be built with the project CMake

Assertion Fix in Split Layer #23746

### Pull Request Readiness Checklist

This PR fixes the issue reported in [#23663](https://github.com/opencv/opencv/issues/23663)

Merge with https://github.com/opencv/opencv_extra/pull/1067

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

Support ONNX operator QLinearSoftmax in dnn #23655

Resolves https://github.com/opencv/opencv/issues/23636.

Merge with https://github.com/opencv/opencv_extra/pull/1064.

This PR maps the QLinearSoftmax (from com.microsoft domain) to SoftmaxInt8 in dnn along with some speed optimization.
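As a rough illustration of what a quantized softmax computes, here is a minimal reference sketch in pure Python. It is not the SoftmaxInt8 implementation from this PR (which includes speed optimizations); the helper names `dequantize`, `quantize`, and `qlinear_softmax`, and the int8 output range, are assumptions made for the example.

```python
import math

def dequantize(q, scale, zero_point):
    # Map quantized integers back to real values.
    return [(v - zero_point) * scale for v in q]

def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    # Map real values to integers and saturate to the int8 range (assumed).
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in x]

def softmax(x):
    # Subtract the maximum first for numerical stability.
    m = max(x)
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]

def qlinear_softmax(q, in_scale, in_zp, out_scale, out_zp):
    # Reference path: dequantize -> float softmax -> requantize.
    return quantize(softmax(dequantize(q, in_scale, in_zp)), out_scale, out_zp)

# Four equal inputs give a probability of 0.25 each.
result = qlinear_softmax([10, 10, 10, 10], 0.1, 0, 1.0 / 256, -128)
print(result)
```

A reference path like this is mainly useful for checking an optimized integer kernel against expected values.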

Todo:

- [x] support QLinearSoftmax with opset = 13

- [x] add model and test data for QLinearSoftmax with opset = 13

- [x] ensure all models have dims >= 3.

- [x] add the script to generate model and test data

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

/build/build_cuda/3p/opencv/linux-x64/ubuntu22.04/Debug/modules/dnn/src/layers/cpu_kernels/convolution.cpp: In function 'void cv::dnn::packData8(char*&, float*&, int&, int&, int&, const int*, int, int, int)':

/build/build_cuda/3p/opencv/linux-x64/ubuntu22.04/Debug/modules/dnn/src/layers/cpu_kernels/convolution.cpp:448:43: error: 'CONV_NR' was not declared in this scope; did you mean 'CONV_3D'?

448 | vx_store(inpbufC_FP32 + k*CONV_NR, vx_load(inptrInC + k1));

| ^~~~~~~

| CONV_3D

LSTM ONNX Layout Attribute Support #23614

### Explanation

This PR contains the changes necessary to support the `layout` attribute. This attribute is present in the [ONNX](https://github.com/onnx/onnx/blob/main/docs/Operators.md#lstm) and [Torch](https://pytorch.org/docs/stable/generated/torch.nn.LSTM.html#lstm) libraries (in Torch it is named `batch_first=True`). When `layout = 1`, the input to the LSTM layer is expected to have the batch dimension first, `[batch_size, sequence_length, features]`, whereas `layout = 0` (the default) expects `[sequence_length, batch_size, features]`.
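The difference between the two layouts can be sketched with plain nested lists. This is only an illustration of the axis order, not the code from this PR; the helper name `to_sequence_first` is hypothetical.

```python
def to_sequence_first(batch_first):
    """Convert [batch, seq, features] nested lists to [seq, batch, features]."""
    batch_size = len(batch_first)
    seq_len = len(batch_first[0])
    # Swap the first two axes; the feature vectors are left untouched.
    return [[batch_first[b][t] for b in range(batch_size)]
            for t in range(seq_len)]

# batch_size=2, sequence_length=3, features=1 (layout = 1)
x_layout1 = [[[1.0], [2.0], [3.0]],
             [[4.0], [5.0], [6.0]]]

# Same data with the sequence dimension first (layout = 0)
x_layout0 = to_sequence_first(x_layout1)
print(x_layout0)
```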

### Test Data

Test data and data generator for PR located here [#1063](https://github.com/opencv/opencv_extra/pull/1063)

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [ ] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

Build DNN without Protobuf

DNN module can be built without Protobuf for Darknet, TFLite, OpenVINO, Torch (not PyTorch) models.

```
cmake \
-DCMAKE_BUILD_TYPE=Release \
-DBUILD_LIST=dnn \
-DWITH_PROTOBUF=OFF \
-DWITH_OPENCL=OFF

7.1M lib/libopencv_dnn.so.4.7.0
```

```
cmake \
-DCMAKE_BUILD_TYPE=Release \
-DBUILD_LIST=dnn \
-DWITH_OPENCL=OFF

3.9M lib/libopencv_dnn.so.4.7.0
```

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

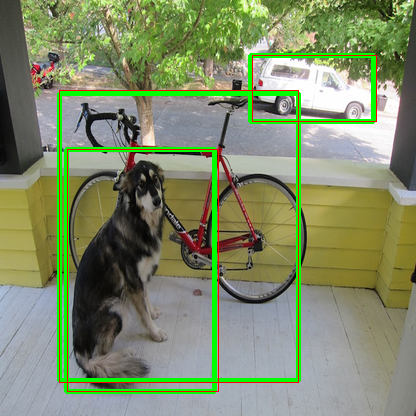

Import and inference INT8 quantized TFLite model #23409

### Pull Request Readiness Checklist

* Support quantized TFLite models

* Enable fused activations (FP32, INT8)

**Merge with extra**: https://github.com/opencv/opencv_extra/pull/1048

In the image, green boxes are from TFLite and red boxes are from OpenCV.

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

Fix ONNX parser for single-layer LSTM hidden and cell states #23475


### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

This PR addresses issue [#21118](https://github.com/opencv/opencv/issues/21118). The problem is that the ONNX parser is unable to read the hidden state and cell state for single-layer LSTMs. This PR fixes the issue by updating the parser to read the hidden and cell states correctly.

DNN: Add New API blobFromImageParam #22750

The purpose of this PR:

1. Add a new API, `blobFromImageParam`, that extends the `blobFromImage` API. It supports different data layouts (NCHW or NHWC) and letterbox resizing (`letter_box`).

2. ~~`blobFromImage` can output `CV_16F`~~
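To make the two features concrete, the sketch below shows the usual letterbox geometry (scale to fit while keeping the aspect ratio, then pad) and how a flat offset is computed in each layout. It is a pure-Python illustration under those general definitions, not the code added by this PR; all function names here are hypothetical.

```python
def letterbox_geometry(src_w, src_h, dst_w, dst_h):
    """Return (new_w, new_h, left, top, right, bottom) for a letterbox resize."""
    # Use the smaller ratio so the whole image fits inside the target.
    scale = min(dst_w / src_w, dst_h / src_h)
    new_w = int(round(src_w * scale))
    new_h = int(round(src_h * scale))
    # Split the remaining space between both sides.
    pad_x, pad_y = dst_w - new_w, dst_h - new_h
    left, top = pad_x // 2, pad_y // 2
    return new_w, new_h, left, top, pad_x - left, pad_y - top

def nchw_offset(n, c, h, w, C, H, W):
    # Channels are the slowest-varying dimension after the batch.
    return ((n * C + c) * H + h) * W + w

def nhwc_offset(n, h, w, c, H, W, C):
    # Channels are interleaved per pixel.
    return ((n * H + h) * W + w) * C + c

geometry = letterbox_geometry(1280, 720, 640, 640)
print(geometry)
```

For a 1280x720 image and a 640x640 target, the image is scaled to 640x360 and padded with 140 rows on the top and on the bottom.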

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [ ] There is a reference to the original bug report and related work

- [ ] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [ ] The feature is well documented and sample code can be built with the project CMake

dnn: Support more operators in CANN backend #23401

This PR adds the support of following layers:

- [x] Sub

- [x] PRelu

- [x] DeConv

- [x] Also warn users if backend is switched back to default if some of the layers are not supported.

- [ ] [Dropped] LSTM: some hacks (adding layers) were introduced which makes it even harder to build the graph for CANN backend.

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

Added LSTM and GRU tests for various batch and input length sizes #23501

Added tests with various sequence lengths and batch sizes.

Test data: https://github.com/opencv/opencv_extra/pull/1057

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [ ] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

Fix identifying initializers in ONNX graph simplification #23296

Fixes https://github.com/opencv/opencv/issues/23295

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

Propagate inputs info for ONNX and TFLite models

### Pull Request Readiness Checklist

Needed for generic applications such as benchmarking pipelines, so that OpenCV can report the default input shapes specified in the models.

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

Four failed tests in opencv_test_dnn are listed below:

* Test_Caffe_layers.Conv_Elu/0, where GetParam() = OCV/CPU

* Test_ONNX_layers.ConvResizePool1d/0, where GetParam() = OCV/CPU

* Test_TensorFlow_layers.tf_reshape_nhwc/0, where GetParam() = OCV/CPU

* Test_Torch_layers.net_inception_block/0, where GetParam() = OCV/CPU

In the winofunc_AtXA_8x8_f32 and winofunc_BtXB_8x8_f32 implementations, incorrect input parameters cause the test failures.

Add four new distinct variables for the last four input parameters of v_transpose4x4 to fix the bugs, and update the related comments.

Signed-off-by: tingbo.liao <tingbo.liao@starfivetech.com>

Related issue: https://github.com/opencv/opencv_zoo/issues/136

Features added:

- Support operators with multiple output: ONNX Split.

- Support Slice without steps.

Bugs fixed:

- Wrong settings in ClipByValue (Relu6).

- Wrong calculation of pads in convolution layer (It is wrong generally but only fixed specifically for CANN for now).

### Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

- [x] I agree to contribute to the project under Apache 2 License.

- [x] To the best of my knowledge, the proposed patch is not based on a code under GPL or another license that is incompatible with OpenCV

- [x] The PR is proposed to the proper branch

- [x] There is a reference to the original bug report and related work

- [x] There is accuracy test, performance test and test data in opencv_extra repository, if applicable

Patch to opencv_extra has the same branch name.

- [x] The feature is well documented and sample code can be built with the project CMake

dnn: add layer normalization for vision transformers

* add layer norm onnx parser, impl and tests

* add onnx graph simplifier for layer norm expanded

* handle the case when constants are of type Initializer

* add test case for layer norm expanded with initializers

* use CV_Assert & CV_CheckType in place of CV_Assert_N; use forward_fallback for OCL_FP16

* use const ref / ref in parameters of invoker::run; extract inner const if from nested loop; use size_t in place of ull

* template hasBias

* remove trailing whitespace

* use pointer parameter with null check; move normSize division & mean_square division outside of loop; use std::max to ensure positive value before std::sqrt

* refactor implementation, optimize parallel_for

* disable layer norm expanded

* remove the removal of layer norm optional outputs
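For reference, layer normalization over one normalized slice can be sketched as follows. This is a minimal pure-Python version of the standard definition, not the parallel implementation from these commits; the variance is clamped to a non-negative value before the square root, in the spirit of the `std::max` note above. The function name and the default `epsilon` are assumptions.

```python
import math

def layer_norm(x, scale, bias=None, epsilon=1e-5):
    """Normalize x to zero mean and unit variance, then apply scale and bias."""
    n = len(x)
    mean = sum(x) / n
    mean_square = sum(v * v for v in x) / n
    # Rounding can make E[x^2] - E[x]^2 slightly negative, so clamp it.
    variance = max(mean_square - mean * mean, 0.0)
    inv_std = 1.0 / math.sqrt(variance + epsilon)
    out = [(v - mean) * inv_std * s for v, s in zip(x, scale)]
    if bias is not None:
        out = [o + b for o, b in zip(out, bias)]
    return out

y = layer_norm([1.0, 2.0, 3.0, 4.0], scale=[1.0] * 4)
print(y)
```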

Switch to new OpenVINO API after 2022.1 release

* Pass Layer_Test_Convolution_DLDT.Accuracy/0 test

* Pass test Test_Caffe_layers.Softmax

* Failed 136 tests

* Fix Concat. Failed 120 tests

* Custom nGraph ops. 19 failed tests

* Set and get properties from Core

* Read model from buffer

* Change MaxPooling layer output names. Restore reshape

* Cosmetic changes

* Cosmetic changes

* Override getOutputsInfo

* Fixes for OpenVINO < 2022.1

* Async inference for 2021.4 and less

* Compile model with config

* Fix serialize for 2022.1

* Asynchronous inference with 2022.1

* Handle 1d outputs

* Work with model with dynamic output shape

* Fixes with 1d output for old API

* Control outputs by nGraph function for all OpenVINO versions

* Refer inputs in PrePostProcessor by indices

* Fix cycled dependency between InfEngineNgraphNode and InfEngineNgraphNet.

Add InferRequest callback only for async inference. Do not capture InferRequest object.

* Fix tests thresholds

* Fix HETERO:GPU,CPU plugin issues with unsupported layer

This change replaces references to a number of deprecated NumPy type aliases (np.bool, np.int, np.float, np.complex, np.object, np.str) with their recommended replacements (bool, int, float, complex, object, str).

Those types were deprecated in 1.20 and are removed in 1.24, cf https://github.com/numpy/numpy/pull/22607.
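A replacement of this kind can be automated with a small regular expression. The sketch below is a hypothetical helper, not the tool used for this change; it assumes the module is imported as `np` and relies on word boundaries so that sized or underscored types such as `np.float32` or `np.bool_` are left alone.

```python
import re

# Match only the bare aliases; "\b" prevents matches inside np.int32, np.bool_, etc.
_DEPRECATED_ALIAS = re.compile(r"\bnp\.(bool|int|float|complex|object|str)\b")

def replace_deprecated_aliases(source):
    """Rewrite np.<alias> to the builtin type of the same name."""
    return _DEPRECATED_ALIAS.sub(lambda m: m.group(1), source)

before = "x = np.zeros(3, dtype=np.float); y = x.astype(np.int32)"
after = replace_deprecated_aliases(before)
print(after)
```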

* cann backend impl v1

* cann backend impl v2: use opencv parsers to build models for cann

* adjust fc according to the new transA and transB

* put cann net in cann backend node and reuse forwardLayer

* use fork() to create a child process and compile cann model

* remove legacy code

* remove debug code

* fall back to CPU backend if there is one layer not supported by CANN backend

* fix netInput forward

DNN: reduce the memory used in convolution layer

* reduce the memory in winograd and disable the test when memory usage is larger than 2 GB.

* remove VERY_LOG tag

DNN: let Quant and Dequant of ONNX_importer support the Constant input.

* let Quant and Dequant support the Constant input.

* fix negative value of axis.
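Handling a negative axis usually means mapping it into the range `[0, ndims)` by counting from the last dimension. A minimal sketch of that convention, with a hypothetical helper name and error handling chosen for the example:

```python
def normalize_axis(axis, ndims):
    """Map an axis in [-ndims, ndims) to its non-negative equivalent."""
    if not -ndims <= axis < ndims:
        raise ValueError(f"axis {axis} is out of range for {ndims} dimensions")
    # Negative values count from the last dimension.
    return axis + ndims if axis < 0 else axis

last_axis = normalize_axis(-1, 4)
print(last_axis)
```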