Intro

The Mount Remote Storage feature can mount one folder in a remote object store bucket.

However,

- You may have multiple buckets in one or more cloud storages.

- You may want to create new buckets or remove old buckets.

How can they be synchronized automatically?

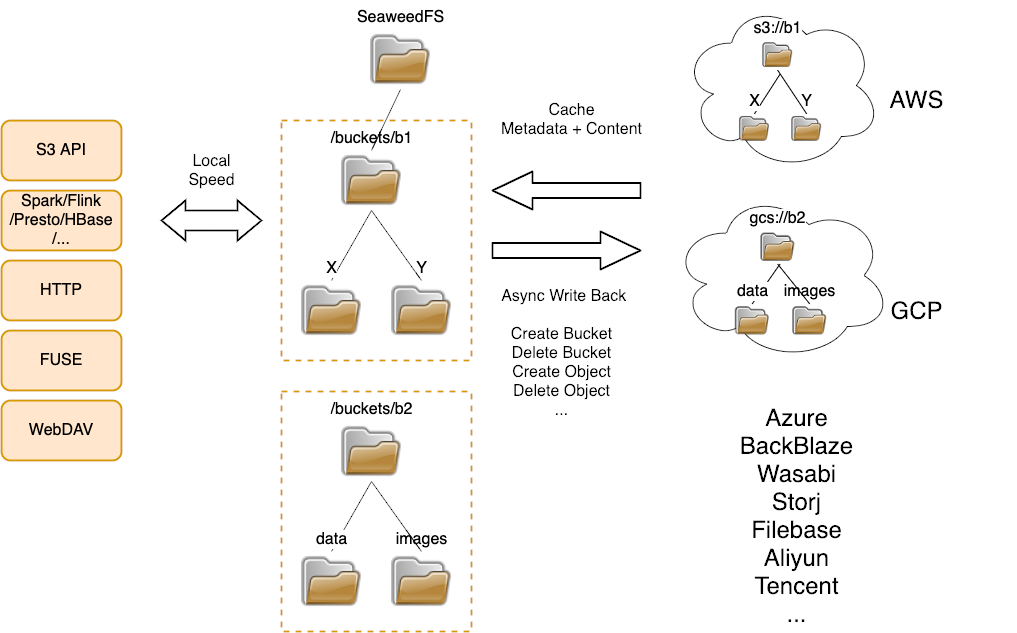

Design of Gateway to Remote Object Store

The weed filer.remote.gateway -createBucketAt=cloud1 process can mirror local changes to remote object storage, i.e.:

- New local buckets are automatically mounted to the remote storage specified by -createBucketAt.

- Deleted local buckets are automatically deleted from their remote storage.

- All local changes under the mounted buckets are uploaded to their remote storage.

Assumption

One or more cloud storages have been configured following Configure Remote Storage.

Setup Steps

1. (Optional) Mount all existing remote buckets as local buckets

If the remote storage already contains buckets, run this to mount all of them as local buckets and synchronize their metadata to the local SeaweedFS cluster.

# in "weed shell"

> remote.mount.buckets -remote=cloud1 -apply

2. Upload local changes in /buckets

Start a weed filer.remote.gateway and let it run continuously.

If new buckets are created locally, this will also automatically create new buckets in the specified remote storage.

It is OK to pause it and resume it later.

It is also OK to change the -createBucketAt=xxx value to a different one, since it only affects new bucket creation.

$ weed filer.remote.gateway -createBucketAt=cloud1

synchronize /buckets, default new bucket creation in cloud1 ...

Some cloud storage vendors require bucket names to be unique. To address this, run the gateway with the -createBucketWithRandomSuffix option.

It will create remote buckets named localBucketName-xxxx, appending a random number as the suffix.

$ weed filer.remote.gateway -createBucketAt=cloud1 -createBucketWithRandomSuffix
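Because the gateway is meant to run continuously and tolerates being paused and resumed, one option is to wrap it in a small restart loop. The following is a minimal sketch, not part of SeaweedFS: the function names gateway_cmd and run_gateway_forever, the REMOTE, RANDOM_SUFFIX, and RUN_GATEWAY variables, and the 5-second delay are all assumptions made for illustration. Only the two flags shown above come from this page.

```shell
#!/bin/sh
# Sketch only: helper names, variables, and the retry delay are illustrative assumptions.

# Print the gateway command line for a given remote storage name.
# $1 = remote storage name (e.g. cloud1), $2 = "1" to add the random-suffix flag.
gateway_cmd() {
  cmd="weed filer.remote.gateway -createBucketAt=$1"
  if [ "$2" = "1" ]; then
    cmd="$cmd -createBucketWithRandomSuffix"
  fi
  printf '%s\n' "$cmd"
}

# Restart the gateway whenever it exits; the text above says pausing and resuming is OK.
run_gateway_forever() {
  while true; do
    # Unquoted on purpose so the command string is split into words and executed.
    $(gateway_cmd "$1" "$2")
    sleep 5
  done
}

# Guarded so that sourcing or dry-running this file does not start the gateway.
if [ "${RUN_GATEWAY:-0}" = "1" ]; then
  run_gateway_forever "${REMOTE:-cloud1}" "${RANDOM_SUFFIX:-0}"
fi
```

For example, saving this as a script and running it with RUN_GATEWAY=1 REMOTE=cloud1 RANDOM_SUFFIX=1 would start the gateway with both flags. A process supervisor would serve the same purpose if one is already in use.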

3. (Optional) Cache or uncache

Run these commands as needed to speed up access or to release disk space.

The basic implementation mechanism is the same as for other mounted folders. You may need to create a cron job to run them periodically.

# in "weed shell"

# cache all pdf files in all mounted buckets

> remote.cache -include=*.pdf

# cache all pdf files in a bucket

> remote.cache -dir=/buckets/some-bucket -include=*.pdf

# uncache all files older than 1 hour and larger than 10KB

> remote.uncache -minAge=3600 -minSize=10240
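The periodic run mentioned above could be scripted roughly as follows. This is a hedged sketch: it assumes that weed shell accepts commands on standard input in your version, and the helper name uncache_cmd, the RUN_WEED guard variable, and the script path in the crontab comment are hypothetical. The remote.uncache flags and values are the ones from the example above.

```shell
#!/bin/sh
# Sketch only: the helper name, guard variable, and script path are assumptions.

# Print the "weed shell" command that uncaches files older than $1 seconds
# and larger than $2 bytes.
uncache_cmd() {
  printf 'remote.uncache -minAge=%s -minSize=%s\n' "$1" "$2"
}

# Guarded so a dry run does not touch the cluster.
# Assumes "weed shell" reads commands from standard input.
if [ "${RUN_WEED:-0}" = "1" ]; then
  uncache_cmd 3600 10240 | weed shell
fi

# Example crontab entry (hypothetical path), running at the top of every hour:
# 0 * * * * RUN_WEED=1 /usr/local/bin/seaweed-uncache.sh
```

Keeping the command text in a small function makes it easy to print and check before the job is scheduled.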
